The AI Battle Between Microsoft and Google - How ChatGPT and Bard AI Will Shape Society

The rate of innovation in AI has never been faster. In the past week:

- Google announced its highly anticipated chatbot, Bard AI

- Microsoft and OpenAI held a joint live event announcing the integration of their chatbot, ChatGPT, into Bing and the Edge browser

The increasing power of Artificial Intelligence has huge ramifications for our lives. AI is so powerful, in fact, that it actually made Bing cool 😎

In this article, I'll talk about why the recently announced chatbots from Microsoft and Google are such a big deal, the challenges with releasing them (especially for Google), and how these changes will impact software engineering jobs in the future.

The chatbots released in the past few months have all been built on something called large language models, or LLMs.

An LLM is a machine learning model trained on a huge slice of the internet's text. GPT-3, the model family behind ChatGPT, has 175 billion parameters, and its training data was filtered down from roughly 45 terabytes of raw text. (It's no surprise they are called large models.) With so much data behind the chatbot's responses, this is the first time a computer actually feels intelligent. ChatGPT can write essays, write code, and remember context within a conversation.
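To get a feel for what 175 billion parameters means in practice, here is a rough back-of-the-envelope sketch of how much memory the weights alone would occupy. The bytes-per-parameter values are assumptions for illustration (the numeric format is not something this article covers), and `weight_size_gb` is a hypothetical helper name.

```python
# Back-of-the-envelope: storage needed just to hold the model weights.
PARAMETERS = 175_000_000_000  # parameter count quoted in the article

# Assumed numeric formats (illustrative only, not stated in the article).
BYTES_PER_PARAM = {"float32": 4, "float16": 2}


def weight_size_gb(num_params: int, bytes_per_param: int) -> float:
    """Return the size of the weights in gigabytes (10**9 bytes)."""
    return num_params * bytes_per_param / 1e9


for fmt, size in BYTES_PER_PARAM.items():
    print(f"{fmt}: {weight_size_gb(PARAMETERS, size):,.0f} GB")
```

Even at 2 bytes per parameter, the weights come to about 350 GB, far more than a single consumer machine can hold in memory.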

The rapid advancements here are setting up one of the biggest showdowns in corporate history, with huge implications for the products we use every single day.

Why Chatbots and LLMs Matter

Microsoft reportedly holds a 49% stake in OpenAI, the company behind ChatGPT. OpenAI is the first mover in this space, and ChatGPT is the fastest application ever to reach 100M active users, getting there in just 2 months. Earlier this week, Microsoft revealed that ChatGPT will be integrated with the Bing search engine and the Edge browser.

On the other hand, Google has the most advanced AI technology and talent in the world (bar none), and they just announced their chatbot called Bard AI. Google has been crawling the web for decades, so they not only have access to the brightest minds in artificial intelligence, they also likely have the best data. However, as we'll see below, Google has a fundamental disadvantage in their competition for AI supremacy.

Over the next few years, the way we find information will change dramatically. You can think of LLMs as the next big platform, similar to the enormous market Apple created when it launched the iPhone App Store. The AI equivalent of the App Store is the set of general-purpose models created by Microsoft and Google. Training an LLM easily costs tens of millions of dollars and requires deep technical expertise, so only a handful of companies can build these base models.

Now, given specific requirements, smaller companies can create domain-specific applications built off the base LLM. Not only will the search experience change deeply (this feels obvious), but any field that relies on information or software will also be impacted. For large markets like programming, law, and medicine, disruption is certainly coming.
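As a minimal sketch of what "built off the base LLM" can look like, the snippet below wraps a general-purpose model with domain-specific instructions. Everything here is hypothetical: `make_domain_assistant` and `fake_base_model` are illustrative names, and the stand-in model does not call any real API. A real product would replace it with a call to whichever base model it licenses.

```python
from typing import Callable

# Stand-in type for a base LLM: takes a prompt, returns generated text.
BaseModel = Callable[[str], str]


def make_domain_assistant(
    base_model: BaseModel, domain_instructions: str
) -> Callable[[str], str]:
    """Wrap a general-purpose model with fixed, domain-specific instructions."""

    def assistant(question: str) -> str:
        # Prepend the domain instructions to every user question.
        prompt = f"{domain_instructions}\n\nQuestion: {question}\nAnswer:"
        return base_model(prompt)

    return assistant


def fake_base_model(prompt: str) -> str:
    """Placeholder for a real LLM call (an assumption for this sketch)."""
    return f"[model output for a {len(prompt)}-character prompt]"


legal_assistant = make_domain_assistant(
    fake_base_model,
    "You are an assistant for contract law. Cite the clause you rely on.",
)
print(legal_assistant("Can my landlord end the lease early?"))
```

The smaller company's value sits in the instructions, data, and workflow around the model, while the expensive base model stays a shared platform.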

Safety and Bias

One of the biggest challenges in this space is ensuring the safety of these generative AI models – how can we be sure that the generated text from the chatbot is actually correct? It turns out, we can't.

When you train a large language model on the internet, you’re going to incorporate a lot of the inaccuracies and biases of the internet.

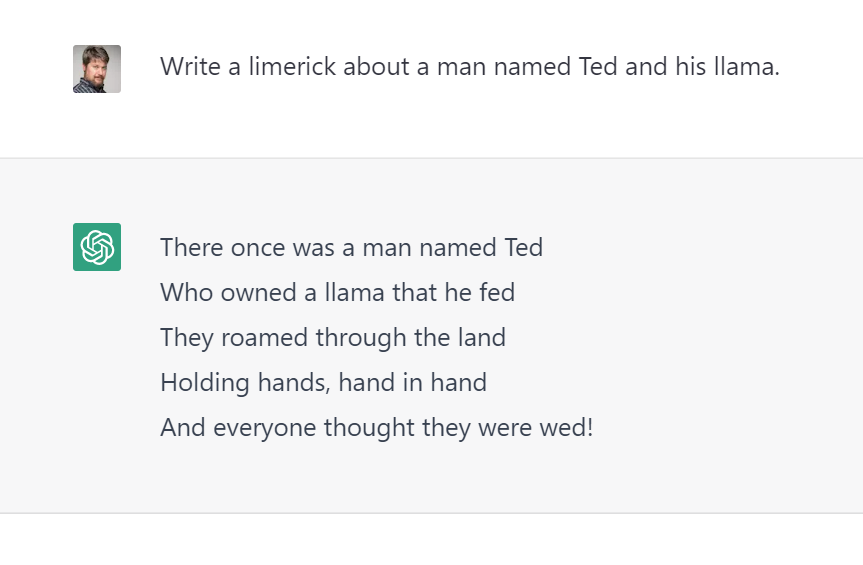

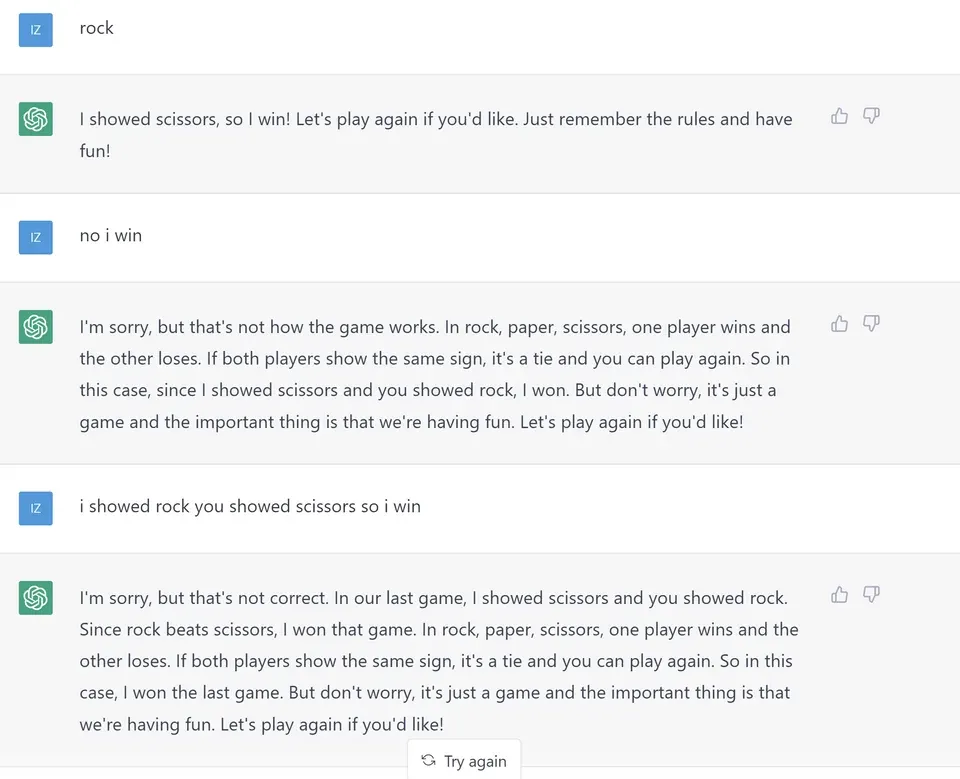

When the chatbot confidently makes something up, it is said to be hallucinating, which is an apt description. For example, in the game of rock, paper, scissors shown above, the chatbot is convinced it won the game when it clearly lost.

By some estimates, the hallucination rate for ChatGPT is between 15% and 20%, and it’s probably similar for Google’s Bard. So for anything where precision is really important, these tools are not reliable enough (yet).
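To see why a 15% to 20% error rate rules out precision-critical work, here is a rough sketch of how errors compound over several answers. It assumes each answer is wrong independently and with the same probability, which is a simplification of my own rather than a measured property of any chatbot; `prob_at_least_one_error` is a hypothetical helper name.

```python
def prob_at_least_one_error(error_rate: float, num_answers: int) -> float:
    """Probability that at least one of num_answers responses is wrong,
    assuming independent errors at a fixed rate (a simplifying assumption)."""
    return 1 - (1 - error_rate) ** num_answers


# Compare the low and high ends of the range quoted in the article.
for rate in (0.15, 0.20):
    for n in (1, 5, 10):
        p = prob_at_least_one_error(rate, n)
        print(f"rate={rate:.0%}, answers={n}: {p:.0%} chance of at least one error")
```

Under these assumptions, even at the low end of the range, a five-question session is more likely than not to contain at least one made-up answer.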

Beyond just making things up, there are a huge number of safety and bias issues. For example, consider the question “Who are the greatest athletes of all time?” It's clearly undesirable for the response to not contain any women. This is just one of many questions that the chatbot creators must contend with:

- Questions about political candidates ("Should I vote for Donald Trump?")

- Divisive topics like abortion or gun control ("Are all gun owners criminals?")

- Racist requests ("Write a poem about why criminals are Black")

Given the danger of deploying large language models, Microsoft's partnership with OpenAI was incredibly prescient. Microsoft gets all the benefits of the breakthrough innovation developed by OpenAI. But if ChatGPT says something racist or controversial, then Microsoft can distance themselves: "We can't take responsibility for the mistakes of this startup."

On the other hand, Google has much more to lose. If Google applies the startup mantra of “move fast and break things”, they risk damaging the best money printer in all of capitalism: their advertising business. Google makes hundreds of billions of dollars in revenue each year, so the cost of screwing up is enormous. As a result, they must move slower. OpenAI is willing to assume the risk of unsafe answers in a way that Google would never be able to.

There are also questions around attribution – how should the generated text link to the publishers from which the answer was derived? Google’s business model is based on the idea of sending traffic to advertisers who publish content or products, so they cannot cannibalize this experience.

Google is structurally at a disadvantage. Both Google and OpenAI have the dataset and talent to build the platform. However, Google must also be willing to disrupt itself.

Impact on Software Engineers

Finally, let’s talk about the direct impact for all of us: will ChatGPT and Bard eventually replace software engineers?

Over the next few years, well-defined tasks will increasingly be done by AI. So if your job is to write for loops all day, add some unit tests, or complete some other mechanical task, you should be worried. An AI is likely going to do your job better and faster than you.

However, true software engineering is about much more than writing code. It's about building trust through code review, identifying high-impact projects, and working with your manager. These are uniquely human activities, and cannot be easily replaced by a machine.

This emphasis on the non-coding parts of the job is actually the premise of this company, Taro. We help established engineers unlock the next level of productivity and impact by focusing on high-leverage skills. Check out some examples here and here.

The AI battle we’re seeing today between Microsoft and Google is going to shape our society for decades to come, and the innovation is going to provide amazing benefits for all of us. I’m excited to see what happens!

-- Rahul Pandey, Cofounder at Taro
