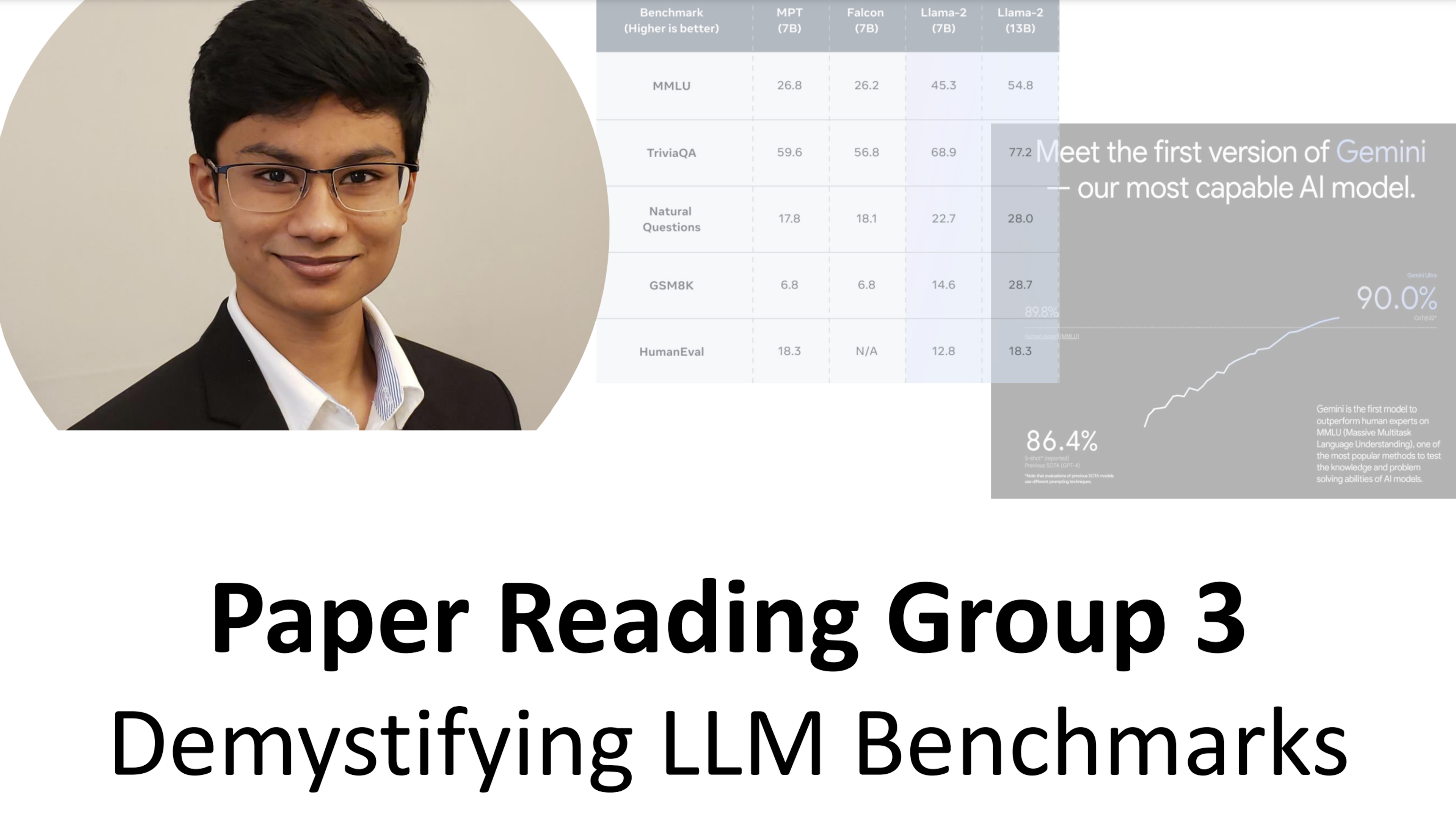

Paper Reading Group 3: Demystifying LLM Benchmarks

Event details

Event description

"Google's Gemini beats human level performance on MMLU" but you might be wondering

- what is MMLU? GLUE? HumanEval?

- How to know which model is good at coding tasks?

- What is considered "human level performance"?

- How do they actually get the results for these benchmarks?

In this talk, Sai will be breaking down all the benchmarks you need to know to be better informed when papers come out claiming to beat benchmarks.

The next paper reading session is on January 8 at 5pm: https://www.jointaro.com/event/paper-reading-group-4-mistral-7b/

Your host:

Sai Shreyas Bhavanasi has worked in Computer Vision and Reinforcement Learning and published 2 first author papers. He has also worked as an MLE and Data Analyst

Event speaker

Explore Jobs By LevelEntry-Level Software Engineer JobsMid-Level Software Engineer JobsSenior Software Engineer JobsStaff Software Engineer Jobs

Explore TrendingLayoffsPerformance Improvement PlanSystem DesignInterpersonal CommunicationTech Lead